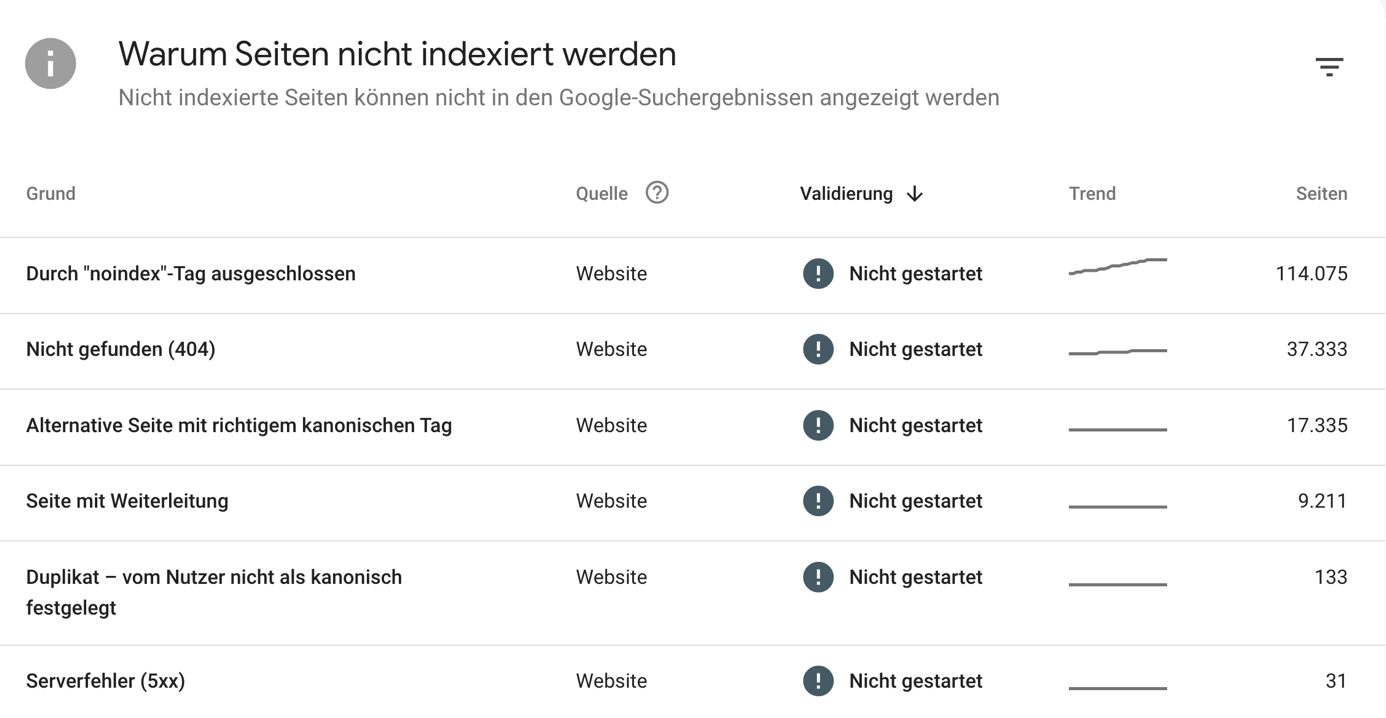

2,561 missing alt attributes, 978 duplicate title tags, 722 pages without H2 headers, 11,243 pages lacking meta descriptions.

Is this the end of my website’s visibility in search results?

No, not at all. Not every “problem” flagged by online marketing tools is a genuine issue, and not all data is equally relevant. Once you understand how SEO tools work and how to interpret their data, they become powerful allies. But when metrics and numbers are misunderstood or taken out of context, they lead to wrong conclusions and waste time and money.

In this article, we’ll explore some of the most common misconceptions about SEO tools, how to use them effectively, and how to accurately interpret the data they provide.

SEO Tools and Their Data

SEO tools excel at analyzing various aspects of a website and presenting the results in numerical form, and they do so faster and more consistently than any manual review could. What they cannot do is interpret those results. It is our responsibility to determine the significance of the numbers and draw the correct conclusions from them.

While it is the tools’ job to generate data, it is our job to interpret it.

Without context-specific interpretation, the numbers are meaningless and disconnected from reality. Only those who understand the numbers and can evaluate their relevance can decide which optimization measures are actually necessary. This article focuses on how to derive the right actions from these numbers.

Don’t Panic: Not Every Problem is Truly a Problem

SEO tools are built to find errors, so they will find some on almost every website. This can quickly unsettle beginners and website owners without a solid understanding of SEO, who are confronted with numerous “warnings” and “errors” from various tools. But how significant are these messages really? Before panicking, take a deep breath and ask yourself:

- Which errors are relevant and which are irrelevant?

- Which problems must be resolved, which are merely cosmetic, and which are not problems at all?

- What actually impacts rankings and user experience?

- Lastly, how much effort is required to fix the error? Is it worth it, or is the effort disproportionate to the effect?
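
The questions above can be turned into a rough triage routine. The following is only a sketch under stated assumptions: the `Finding` class, the `triage` function, the 0 to 5 scoring scales, and the sample entries are all hypothetical and do not come from any SEO tool. The scores stand in for your own judgment about relevance, impact, and effort.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One issue flagged by a tool, scored by a human (hypothetical structure)."""
    name: str
    affects_rankings_or_users: bool  # your answer to "is this relevant at all?"
    impact: int  # assumed scale: 0 (none) to 5 (severe)
    effort: int  # assumed scale: 1 (trivial) to 5 (major project)


def triage(findings):
    """Drop findings without real impact, then sort by impact per unit of effort."""
    relevant = [
        f for f in findings
        if f.affects_rankings_or_users and f.impact > 0
    ]
    return sorted(relevant, key=lambda f: f.impact / f.effort, reverse=True)


# Placeholder entries with made-up scores, for illustration only.
findings = [
    Finding("example finding A", True, impact=4, effort=2),
    Finding("example finding B", False, impact=0, effort=3),
    Finding("example finding C", True, impact=2, effort=4),
]

for f in triage(findings):
    print(f"{f.name}: impact {f.impact}, effort {f.effort}")
```

Dividing impact by effort is just one simple way to rank; what matters is that the scores come from someone who understands the website, not from the tool's error count.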

Warnings in Google Search Console

Let’s start with arguably the most important tool available to webmasters and SEOs: Google Search Console. It is the only tool that provides data directly from Google. We recommend that everyone who runs or builds websites verify them in Google Search Console to access this valuable data.