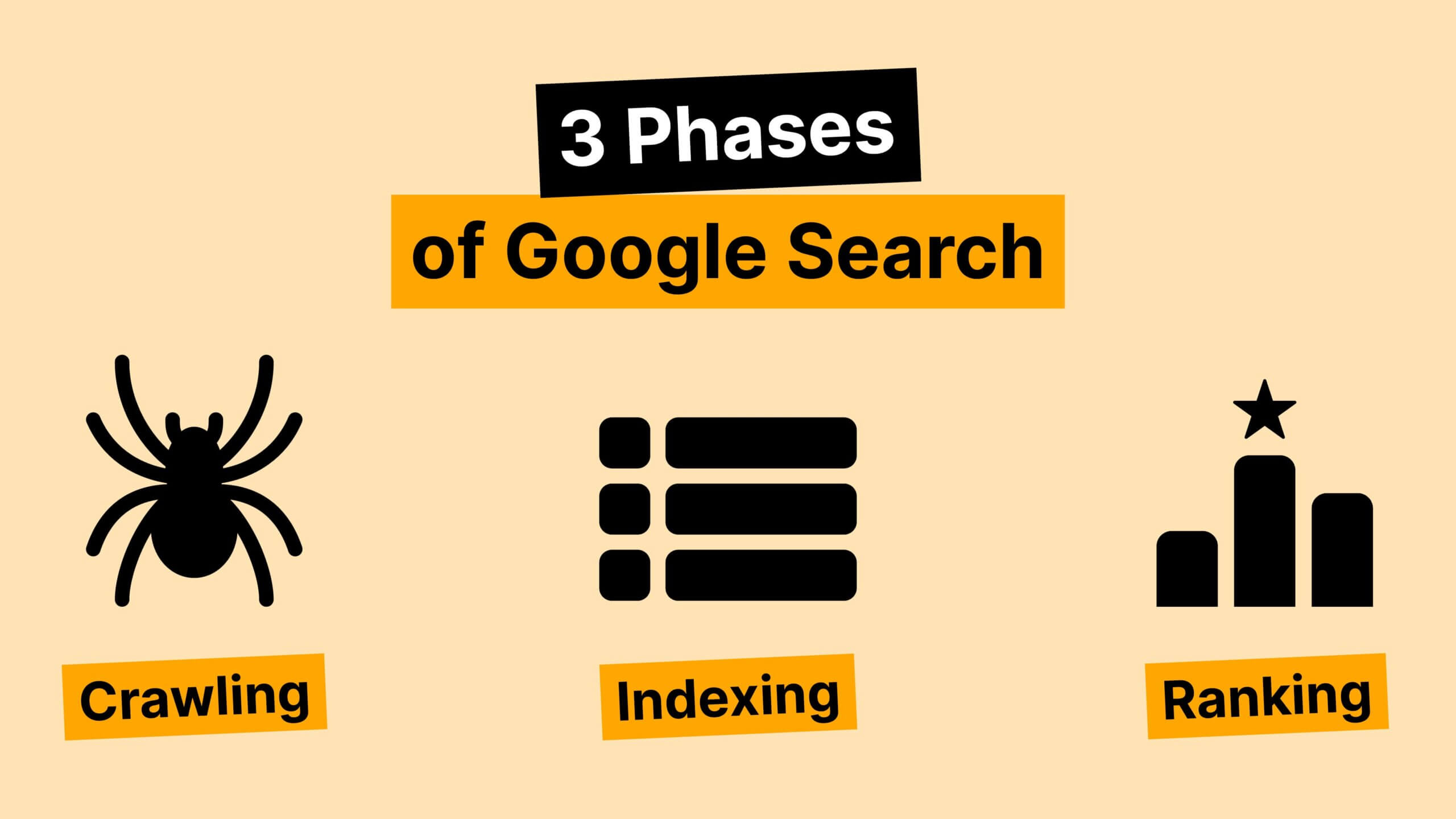

For a page to appear in search results, it must first be found. This is where crawling comes in: it is the first step in how search engines capture and evaluate content.

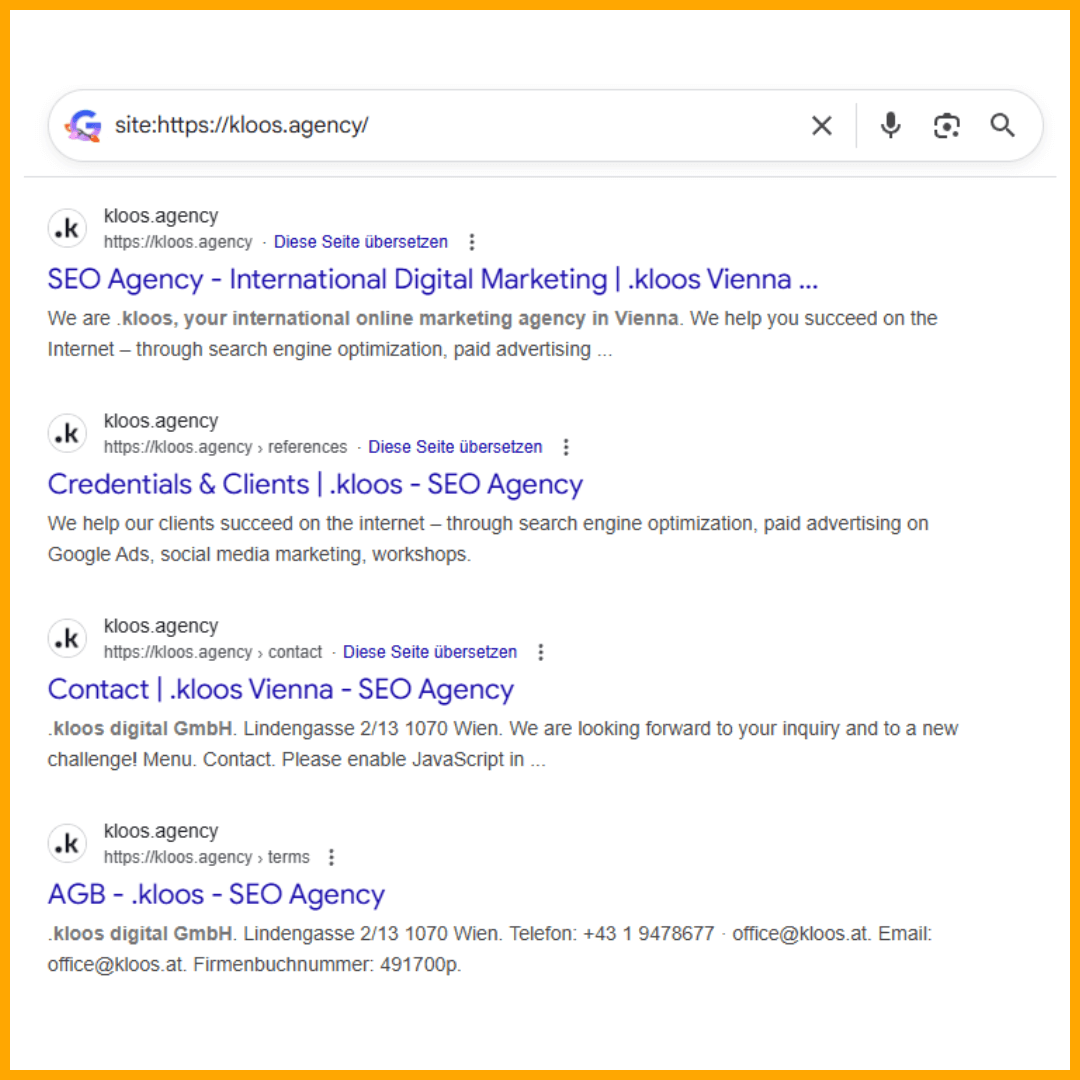

Search engines such as Google send automated programs, known as crawlers or spiders, across the web. The best known is Googlebot. It starts from URLs it already knows and follows the links it finds on those pages, much like someone clicking from one Wikipedia article to the next. Each newly discovered URL is queued for crawling, and the content of crawled pages is then stored in a vast index. Google's indexing system is known as ‘Caffeine’.
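The link-following idea can be sketched in a few lines of Python. This is a simplified illustration, not how Googlebot is actually implemented: the `crawl` function, the `fetch` callable, and the in-memory `PAGES` dictionary (which stands in for real HTTP requests) are all assumptions made for this example.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch):
    """Breadth-first link discovery starting from already known URLs.

    `fetch` is a hypothetical callable that returns the HTML of a URL,
    or None if the page cannot be retrieved.
    """
    discovered = set(seed_urls)
    queue = deque(seed_urls)
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            absolute = urljoin(url, href)  # resolve relative links
            if absolute not in discovered:
                discovered.add(absolute)
                queue.append(absolute)
    return discovered


# A tiny in-memory "web" (assumption: replaces real network access).
PAGES = {
    "https://example.com/": '<a href="/blog">Blog</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post 1</a>',
    "https://example.com/blog/post-1": "<p>No links here.</p>",
    "https://example.com/orphan": "<p>Nothing links to this page.</p>",
}

found = crawl(["https://example.com/"], PAGES.get)
print(sorted(found))
```

In this toy setup, `https://example.com/orphan` is never discovered because no crawled page links to it.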

A practical example: if a well-known magazine links to a blog article, the crawler can reach the article by following that link. Without such a connection, as with new pages that have no external links, the page may be discovered late or not at all. This is why internal linking is just as important as links from external sources: it lets the crawler reach new pages from pages it already knows.

However, not all pages of a website are crawled automatically. Crawling capacity per site, often called the crawl budget, is limited, and Google prioritizes pages based on technical quality and relevance. Broken links, slow loading times, and unnecessary page variants (e.g. the same page reachable under several parameterized URLs) can use up that capacity and result in valuable content being overlooked.
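To get a feel for how parameterized URLs multiply page variants, the following sketch groups URLs that differ only in parameters assumed not to change the content. The `IGNORED_PARAMS` set and the helper names are hypothetical; which parameters are safe to ignore depends entirely on the site in question.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change what the page shows.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}


def canonicalize(url):
    """Drop ignored parameters, sort the rest, and lowercase the host."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS
    ]
    kept.sort()
    # The fragment is dropped because it is not sent to the server.
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def group_variants(urls):
    """Map each canonical URL to the list of variants that collapse into it."""
    groups = {}
    for url in urls:
        groups.setdefault(canonicalize(url), []).append(url)
    return groups


VARIANTS = [
    "https://example.com/shoes",
    "https://example.com/shoes?utm_source=newsletter",
    "https://example.com/shoes?sessionid=42",
    "https://example.com/shoes?color=red",
]

groups = group_variants(VARIANTS)
for canonical, variants in groups.items():
    print(canonical, len(variants))
```

Here three of the four URLs collapse into `https://example.com/shoes`, while the `color=red` URL is kept apart because that parameter is assumed to change the content.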

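You can use the robots.txt file to define which areas of a website crawlers may visit. For instance, you can ask crawlers to stay out of test environments. Keep in mind that robots.txt only instructs well-behaved crawlers and does not block public access, so truly sensitive content should be protected by authentication instead.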
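As a minimal sketch, the rules below ask all crawlers to stay out of a `/staging/` directory, and Python's standard `urllib.robotparser` module shows how a compliant crawler would interpret them. The paths and the `example.com` domain are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; on a real site this file is served
# from the root of the host, e.g. https://example.com/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /staging/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

blog_allowed = parser.can_fetch("Googlebot", "https://example.com/blog/post-1")
staging_allowed = parser.can_fetch("Googlebot", "https://example.com/staging/new-design")

print(blog_allowed)     # True: no rule matches this path
print(staging_allowed)  # False: the path starts with /staging/
```

The check only reflects what a crawler that honors robots.txt would do; anyone with the URL can still open the staging page in a browser.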